Intelligent agent

In artificial intelligence, an intelligent agent is an entity that perceives its environment, takes actions autonomously to achieve goals, and may improve its performance through machine learning or by acquiring knowledge. Leading AI textbooks define artificial intelligence as the "study and design of intelligent agents," emphasizing that goal-directed behavior is central to intelligence.

A specialized subset of intelligent agents, agentic AI (also known as an AI agent or simply agent), expands this concept by proactively pursuing goals, making decisions, and taking actions over extended periods, thereby exemplifying a novel form of digital agency.[1]

Intelligent agents can range from simple to highly complex. A basic thermostat or control system is considered an intelligent agent, as is a human being, or any other system that meets the same criteria—such as a firm, a state, or a biome.[2]

Intelligent agents operate based on an objective function, which encapsulates their goals. They are designed to create and execute plans that maximize the expected value of this function upon completion.[3]

For example, a reinforcement learning agent has a reward function, which allows programmers to shape its desired behavior.[4] Similarly, an evolutionary algorithm's behavior is guided by a fitness function.[5]

Intelligent agents in artificial intelligence are closely related to agents in economics, and versions of the intelligent agent paradigm are studied in cognitive science, ethics, and the philosophy of practical reason, as well as in many interdisciplinary socio-cognitive models and computer social simulations.

Intelligent agents are often described schematically as abstract functional systems similar to computer programs. To distinguish theoretical models from real-world implementations, abstract descriptions of intelligent agents are called abstract intelligent agents. Intelligent agents are also closely related to software agents—autonomous computer programs that carry out tasks on behalf of users. They are also referred to using a term borrowed from economics: a "rational agent".[2]

Intelligent Agents as the Foundation of AI


The concept of intelligent agents provides a foundational lens through which to define and understand artificial intelligence. For instance, the influential textbook Artificial Intelligence: A Modern Approach (Russell & Norvig) describes:

- Agent: Anything that perceives its environment (using sensors) and acts upon it (using actuators). E.g., a robot with cameras and wheels, or a software program that reads data and makes recommendations.

- Rational Agent: An agent that strives to achieve the *best possible outcome* based on its knowledge and past experiences. "Best" is defined by a performance measure – a way of evaluating how well the agent is doing.

- Artificial Intelligence (as a field): The study and creation of these rational agents.

Other researchers and definitions build upon this foundation. Padgham & Winikoff emphasize that intelligent agents should react to changes in their environment in a timely way, proactively pursue goals, and be flexible and robust (able to handle unexpected situations). Some also suggest that ideal agents should be "rational" in the economic sense (making optimal choices) and capable of complex reasoning, like having beliefs, desires, and intentions (BDI model). Kaplan and Haenlein offer a similar definition, focusing on a system's ability to understand external data, learn from that data, and use what is learned to achieve goals through flexible adaptation.

Defining AI in terms of intelligent agents offers several key advantages:

- Avoids Philosophical Debates: It sidesteps arguments about whether AI is "truly" intelligent or conscious, like those raised by the Turing test or Searle's Chinese Room. It focuses on behavior and goal achievement, not on replicating human thought.

- Objective Testing: It provides a clear, scientific way to evaluate AI systems. Researchers can compare different approaches by measuring how well they maximize a specific "goal function" (or objective function). This allows for direct comparison and combination of techniques.

- Interdisciplinary Communication: It creates a common language for AI researchers to collaborate with other fields like mathematical optimization and economics, which also use concepts like "goals" and "rational agents."

Objective Function

In the context of intelligent agents, an objective function (or goal function) defines the agent's goals. A more intelligent agent is one that consistently takes actions that lead to better outcomes according to its objective function. Essentially, the objective function is a measure of success.

The objective function can be:

- Simple: For example, in a game of Go, the objective function could be 1 for a win and 0 for a loss.

- Complex: It might involve performing actions similar to those that have proven successful in the past, requiring the agent to learn and adapt.

The objective function encompasses all the goals the agent is designed to achieve. For rational agents, it also includes the acceptable trade-offs between potentially conflicting goals. For instance, a self-driving car's objective function might balance safety, speed, and passenger comfort.
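As a minimal sketch of how such trade-offs might be encoded, the following example scores a trip as a weighted sum of three terms. The feature names and weights are assumptions chosen for illustration and are not taken from any real driving system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TripOutcome:
    """Hypothetical summary of one simulated trip."""
    collisions: int         # safety
    travel_minutes: float   # speed
    hard_brakes: int        # passenger comfort


# Illustrative weights: a larger magnitude means the goal matters more.
WEIGHTS = {"collisions": -1000.0, "travel_minutes": -1.0, "hard_brakes": -5.0}


def objective(outcome: TripOutcome) -> float:
    """Collapse the competing goals into one score; higher is better."""
    return (
        WEIGHTS["collisions"] * outcome.collisions
        + WEIGHTS["travel_minutes"] * outcome.travel_minutes
        + WEIGHTS["hard_brakes"] * outcome.hard_brakes
    )


cautious = TripOutcome(collisions=0, travel_minutes=30.0, hard_brakes=1)
reckless = TripOutcome(collisions=1, travel_minutes=20.0, hard_brakes=6)

# The faster trip loses because the collision penalty dominates.
best = max([cautious, reckless], key=objective)
```

The relative size of the weights is what expresses the acceptable trade-off: here one collision outweighs any realistic time saving.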

Different terms are used to describe this concept, depending on the context. These include:

- Utility function: Often used in economics and decision theory, representing the desirability of a state.

- Objective function: A general term used in optimization.

- Loss function: Typically used in machine learning, where the goal is to minimize the loss (error).

- Reward Function: Used in reinforcement learning.

- Fitness Function: Used in evolutionary systems.

Goals, and therefore the objective function, can be:

- Explicitly defined: Programmed directly into the agent.

- Induced: Learned or evolved over time.

  - In reinforcement learning, a "reward function" provides feedback, encouraging desired behaviors and discouraging undesirable ones. The agent learns to maximize its cumulative reward.

  - In evolutionary systems, a "fitness function" determines which agents are more likely to reproduce. This is analogous to natural selection, where organisms evolve to maximize their chances of survival and reproduction.[6]
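The notion of cumulative reward can be illustrated with a short sketch. It assumes a discounted sum, a common convention in which later rewards count for less; the discount factor `gamma` and the example episodes are assumptions, not part of the description above.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards, each discounted by gamma once per time step."""
    total = 0.0
    # Work backwards so each step adds its reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


late_reward = [0.0, 0.0, 1.0]    # the reward arrives on the third step
early_reward = [1.0, 0.0, 0.0]   # the reward arrives immediately

# With gamma < 1 the agent prefers the episode that pays off sooner.
preferred = max([late_reward, early_reward], key=discounted_return)
```

Setting `gamma=1.0` recovers the plain, undiscounted sum of rewards.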

Some AI systems, such as nearest-neighbor, reason by analogy instead of being explicitly goal-driven; however, even these systems can have goals implicitly defined within their training data.[7] Such systems can still be benchmarked if the non-goal system is framed as a system whose "goal" is to accomplish its narrow classification task.[8]

Systems that are not traditionally considered agents, such as knowledge-representation systems, are sometimes subsumed into the paradigm by framing them as agents that have a goal of (for example) answering questions as accurately as possible; the concept of an "action" is here extended to encompass the "act" of giving an answer to a question. As an additional extension, mimicry-driven systems can be framed as agents that are optimizing a "goal function" based on how closely the agent succeeds in mimicking the desired behavior.[3] In the generative adversarial networks of the 2010s, an "encoder"/"generator" component attempts to mimic and improvise human text composition. The generator attempts to maximize a function encapsulating how well it can fool an antagonistic "predictor"/"discriminator" component.[9]

While symbolic AI systems often accept an explicit goal function, the paradigm can also be applied to neural networks and to evolutionary computing. Reinforcement learning can generate intelligent agents that appear to act in ways intended to maximize a "reward function".[10] Sometimes, rather than setting the reward function to be directly equal to the desired benchmark evaluation function, machine learning programmers will use reward shaping to initially give the machine rewards for incremental progress in learning.[11] Yann LeCun stated in 2018, "Most of the learning algorithms that people have come up with essentially consist of minimizing some objective function."[12] AlphaZero chess had a simple objective function; each win counted as +1 point, and each loss counted as -1 point. An objective function for a self-driving car would have to be more complicated.[13] Evolutionary computing can evolve intelligent agents that appear to act in ways intended to maximize a "fitness function" that influences how many descendants each agent is allowed to leave.[5]
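Reward shaping can be sketched with the potential-based form analyzed in the cited paper by Ng, Harada, and Russell, where a shaping term of the form γΦ(s′) − Φ(s) is added to the base reward. The corridor environment, the potential function, and the constants below are hypothetical and chosen only for illustration.

```python
GOAL = 5      # hypothetical goal position on a one-dimensional corridor
GAMMA = 0.9   # assumed discount factor


def base_reward(next_state: int) -> float:
    """Sparse benchmark reward: 1 only when the goal is reached."""
    return 1.0 if next_state == GOAL else 0.0


def potential(state: int) -> float:
    """Hypothetical potential: larger (less negative) closer to the goal."""
    return float(-abs(GOAL - state))


def shaped_reward(state: int, next_state: int) -> float:
    """Base reward plus the potential-based shaping term."""
    shaping = GAMMA * potential(next_state) - potential(state)
    return base_reward(next_state) + shaping


toward_goal = shaped_reward(2, 3)   # a step that makes incremental progress
away_from_goal = shaped_reward(3, 2)
```

In this sketch a step toward the goal earns a positive shaped reward even though the sparse base reward is still zero, which is the "incremental progress" signal described above.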

The mathematical formalism of AIXI was proposed as a maximally intelligent agent in this paradigm.[14] However, AIXI is uncomputable. In the real world, an intelligent agent is constrained by finite time and hardware resources, and scientists compete to produce algorithms that can achieve progressively higher scores on benchmark tests with existing hardware.[15]

Agent Function

An intelligent agent's behavior can be described mathematically by an agent function. This function determines what the agent does based on what it has seen.

A percept refers to the agent's sensory inputs at a single point in time. For example, a self-driving car's percepts might include camera images, lidar data, GPS coordinates, and speed readings at a specific instant. The agent uses these percepts, and potentially its history of percepts, to decide on its next action (e.g., accelerate, brake, turn).

The agent function, often denoted as f, maps the agent's entire history of percepts to an action.[16]

Mathematically, this can be represented as:

$$f : P^{*} \rightarrow A$$

Where:

- P\* represents the set of all possible percept sequences (the agent's entire perceptual history). The asterisk (*) indicates a sequence of zero or more percepts.

- A represents the set of all possible actions the agent can take.

- f is the agent function that maps a percept sequence to an action.

It's crucial to distinguish between the agent function (an abstract mathematical concept) and the agent program (the concrete implementation of that function).

- The agent function is a theoretical description.

- The agent program is the actual code that runs on the agent. The agent program takes the current percept as input and produces an action as output.
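The distinction can be sketched as follows. The agent function is written out as a (deliberately tiny and partial) lookup table from percept sequences to actions, while the agent program receives one percept at a time and keeps the history itself. The two-square vacuum world, the percept encoding, and the `noop` fallback are assumptions made for this illustration.

```python
from typing import Dict, List, Tuple

Percept = Tuple[str, str]   # (location, status), a hypothetical encoding
Action = str

# Partial agent function: maps whole percept sequences to actions.
AGENT_FUNCTION: Dict[Tuple[Percept, ...], Action] = {
    (("A", "dirty"),): "suck",
    (("A", "clean"),): "right",
    (("A", "clean"), ("B", "dirty")): "suck",
    (("A", "clean"), ("B", "clean")): "left",
}


class TableDrivenAgentProgram:
    """Agent program: takes the current percept and returns an action."""

    def __init__(self, table: Dict[Tuple[Percept, ...], Action]) -> None:
        self.table = table
        self.history: List[Percept] = []

    def __call__(self, percept: Percept) -> Action:
        self.history.append(percept)
        # Sequences missing from the table fall back to a no-op in this sketch.
        return self.table.get(tuple(self.history), "noop")


program = TableDrivenAgentProgram(AGENT_FUNCTION)
first_action = program(("A", "clean"))
second_action = program(("B", "dirty"))
```

A full table grows with every possible percept sequence, which is one reason practical agent programs compute actions rather than look them up.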

The agent function can incorporate a wide range of decision-making approaches, including:[17]

- Calculating the utility (desirability) of different actions.

- Using logical rules and deduction.

- Employing fuzzy logic.

- Other methods.

Classes of intelligent agents

Russell and Norvig's classification

Russell & Norvig (2003) group agents into five classes based on their degree of perceived intelligence and capability:[18]

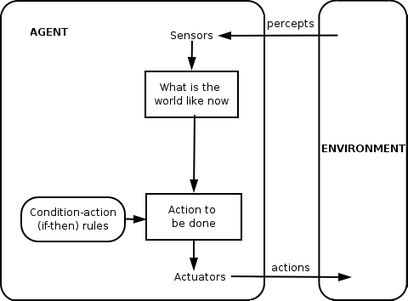

Simple reflex agents


Simple reflex agents act only on the basis of the current percept, ignoring the rest of the percept history. The agent function is based on the condition-action rule: "if condition, then action".

This agent function only succeeds when the environment is fully observable. Some reflex agents can also contain information on their current state which allows them to disregard conditions whose actuators are already triggered.

Infinite loops are often unavoidable for simple reflex agents operating in partially observable environments. If the agent can randomize its actions, it may be possible to escape from infinite loops.
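A minimal sketch of a simple reflex agent, again assuming a hypothetical two-square vacuum world. The first function applies condition-action rules to the current percept only; the second shows how an agent that cannot sense its location might randomize its movement rather than commit to one fixed direction.

```python
import random

# Condition-action rules keyed by the current percept (location, status).
RULES = {
    ("A", "dirty"): "suck",
    ("B", "dirty"): "suck",
    ("A", "clean"): "right",
    ("B", "clean"): "left",
}


def simple_reflex_agent(percept):
    """Choose an action from the current percept, ignoring all history."""
    return RULES[percept]


def randomized_reflex_agent(status, rng):
    """Variant for an agent with a dirt sensor but no location sensor.

    A fixed rule such as "always move left" could loop forever against
    a wall, so the direction is chosen at random instead.
    """
    if status == "dirty":
        return "suck"
    return rng.choice(["left", "right"])


action = simple_reflex_agent(("A", "dirty"))
move = randomized_reflex_agent("clean", random.Random(0))
```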

Model-based reflex agents


A model-based agent can handle partially observable environments. It stores its current state internally, maintaining a structure that describes the part of the world that cannot currently be seen. This knowledge about "how the world works" is called a model of the world, hence the name "model-based agent".

A model-based reflex agent should maintain an internal model that depends on the percept history and thereby reflects at least some of the unobserved aspects of the current state. The internal model lets the agent keep track of the percept history and of the effect of its actions on the environment. The agent then chooses an action in the same way as a simple reflex agent.

An agent may also use models to describe and predict the behaviors of other agents in the environment.[19]
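The idea of internal state can be sketched with a vacuum agent that remembers the last known status of each square, including squares it cannot currently see. The world, the percept encoding, and the `noop` action are assumptions for illustration.

```python
class ModelBasedVacuumAgent:
    """Reflex agent that keeps a model of squares it cannot currently see."""

    def __init__(self):
        # Internal state: None means "not yet observed".
        self.model = {"A": None, "B": None}

    def __call__(self, percept):
        location, status = percept
        self.model[location] = status            # update state from the percept
        if status == "dirty":
            self.model[location] = "clean"       # predicted effect of the action
            return "suck"
        if all(s == "clean" for s in self.model.values()):
            return "noop"                        # model says nothing is left to do
        return "right" if location == "A" else "left"


agent = ModelBasedVacuumAgent()
actions = [agent(("A", "dirty")), agent(("A", "clean")), agent(("B", "clean"))]
```

Unlike the simple reflex agent, this one stops moving once its model indicates that both squares are clean, even though it only ever perceives one square at a time.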

Goal-based agents


Goal-based agents further expand on the capabilities of the model-based agents, by using "goal" information. Goal information describes situations that are desirable. This provides the agent a way to choose among multiple possibilities, selecting the one which reaches a goal state. Search and planning are the subfields of artificial intelligence devoted to finding action sequences that achieve the agent's goals.
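As a sketch of how search can produce a sequence that reaches a goal state, the following example runs breadth-first search over a small, hypothetical state graph. The graph and state names are invented for illustration; breadth-first search is only one of many search strategies.

```python
from collections import deque

# Hypothetical map: each state lists the states reachable in one action.
GRAPH = {
    "home": ["street", "park"],
    "street": ["office", "cafe"],
    "park": ["cafe"],
    "cafe": ["office"],
    "office": [],
}


def plan(start, goal, graph):
    """Breadth-first search for a shortest path of states from start to goal."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for next_state in graph[path[-1]]:
            if next_state not in visited:
                visited.add(next_state)
                frontier.append(path + [next_state])
    return None  # the goal is unreachable from the start state


route = plan("home", "office", GRAPH)
```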

Utility-based agents


Goal-based agents only distinguish between goal states and non-goal states. It is also possible to define a measure of how desirable a particular state is. This measure can be obtained through the use of a utility function, which maps a state to a measure of the utility of the state. A more general performance measure should allow a comparison of different world states according to how well they satisfy the agent's goals. The term utility can be used to describe how "happy" the agent is.

A rational utility-based agent chooses the action that maximizes the expected utility of the action outcomes, that is, what the agent expects to derive, on average, given the probabilities and utilities of each outcome. A utility-based agent has to model and keep track of its environment, tasks that have involved a great deal of research on perception, representation, reasoning, and learning.
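Expected-utility maximization can be sketched in a few lines. The actions, outcome probabilities, and utility values below are hypothetical numbers chosen for illustration.

```python
# Hypothetical outcome model: action -> list of (probability, resulting state).
OUTCOMES = {
    "highway": [(0.8, "on_time"), (0.2, "late")],
    "back_roads": [(1.0, "slightly_late")],
}

# Hypothetical utility function mapping each state to a number.
UTILITY = {"on_time": 10.0, "slightly_late": 7.0, "late": 0.0}


def expected_utility(action):
    """Probability-weighted average utility of an action's outcomes."""
    return sum(p * UTILITY[state] for p, state in OUTCOMES[action])


def choose_action():
    """Pick the action with the highest expected utility."""
    return max(OUTCOMES, key=expected_utility)


chosen = choose_action()
```

Here the riskier action is chosen because its average payoff is higher; a different utility function, for example one that penalizes being late much more heavily, could reverse the choice.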

Learning agents


Learning lets agents begin in unknown environments and gradually surpass the bounds of their initial knowledge. A key distinction in such agents is the separation between a "learning element," responsible for improving performance, and a "performance element," responsible for choosing external actions.

The learning element gathers feedback from a "critic" to assess the agent’s performance and decides how the performance element—also called the "actor"—can be adjusted to yield better outcomes. The performance element, once considered the entire agent, interprets percepts and takes actions.

The final component, the "problem generator," suggests new and informative experiences that encourage exploration and further improvement.
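The four components can be sketched together in a very small learner. This is an illustrative arrangement under stated assumptions, not a standard implementation: the critic is a fixed scoring function, the learning element keeps a running average of feedback per action, and the problem generator proposes a random action with an assumed exploration rate.

```python
import random


class LearningAgent:
    def __init__(self, actions, explore_rate=0.2, seed=0):
        self.values = {a: 0.0 for a in actions}   # knowledge used to act
        self.counts = {a: 0 for a in actions}
        self.explore_rate = explore_rate          # assumed exploration rate
        self.rng = random.Random(seed)

    def performance_element(self):
        """Pick the action currently believed to be best."""
        return max(self.values, key=self.values.get)

    def problem_generator(self):
        """Suggest an exploratory action to gather new experience."""
        return self.rng.choice(list(self.values))

    def learning_element(self, action, feedback):
        """Update the running-average estimate from the critic's feedback."""
        self.counts[action] += 1
        self.values[action] += (feedback - self.values[action]) / self.counts[action]

    def step(self, critic):
        explore = self.rng.random() < self.explore_rate
        action = self.problem_generator() if explore else self.performance_element()
        self.learning_element(action, critic(action))
        return action


def critic(action):
    """Hypothetical critic: scores actions against a fixed standard."""
    return {"a": 0.2, "b": 1.0}[action]


agent = LearningAgent(["a", "b"])
for _ in range(200):
    agent.step(critic)
```

Without the problem generator, this agent would keep repeating the first action it tried, since it would never observe that the other action scores higher.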

Weiss's classification

According to Weiss (2013), agents can be categorized into four classes:

- Logic-based agents, where decisions about actions are derived through logical deduction.

- Reactive agents, where decisions occur through a direct mapping from situation to action.

- Belief–desire–intention agents, where decisions depend on manipulating data structures that represent the agent's beliefs, desires, and intentions.

- Layered architectures, where decision-making takes place across multiple software layers, each of which reasons about the environment at a different level of abstraction.

Other

In 2013, Alexander Wissner-Gross published a theory exploring the relationship between freedom and intelligence in intelligent agents.[20][21]

Hierarchies of agents

Intelligent agents can be organized hierarchically into multiple "sub-agents". Intelligent sub-agents process and perform lower-level functions. Taken together, the intelligent agent and sub-agents create a complete system that can accomplish difficult tasks or goals with behaviors and responses that display a form of intelligence.

Generally, an agent can be constructed by separating its body into sensors and actuators, so that a perception system turns sensor readings into a description of the world, which a controller takes as input to produce commands for the actuators. However, a hierarchy of controller layers is often necessary to balance the immediate reaction desired for low-level tasks and the slow reasoning about complex, high-level goals.[22]
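A two-layer controller hierarchy might be sketched as follows. The one-dimensional positions, waypoints, and action names are assumptions for illustration: the upper layer reasons about where to go next, and the lower layer reacts immediately to what is in front of the agent.

```python
def high_level_planner(position, waypoints):
    """Slow layer: pick the next waypoint that has not been reached yet."""
    for waypoint in waypoints:
        if waypoint > position:
            return waypoint
    return position  # all waypoints reached, so stay in place


def low_level_controller(position, target, obstacle_ahead):
    """Fast layer: react to obstacles first, otherwise head for the target."""
    if obstacle_ahead:
        return "stop"
    return "forward" if target > position else "hold"


def hierarchical_agent(position, obstacle_ahead, waypoints=(3, 7, 10)):
    """Pass the upper layer's target down to the lower layer."""
    target = high_level_planner(position, waypoints)
    return low_level_controller(position, target, obstacle_ahead)


command = hierarchical_agent(position=0, obstacle_ahead=False)
```

In a real system the upper layer would typically run less often than the lower one; this sketch calls both on every step only to keep the example short.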

Alternative definitions and uses

"Intelligent agent" is also often used as a vague term, sometimes synonymous with "virtual personal assistant".[23] Some 20th-century definitions characterize an agent as a program that aids a user or that acts on behalf of a user.[24] These examples are known as software agents, and sometimes an "intelligent software agent" (that is, a software agent with intelligence) is referred to as an "intelligent agent".

According to Nikola Kasabov, intelligent agent systems should exhibit the following characteristics:[25]

- Accommodate new problem solving rules incrementally.

- Adapt online and in real time.

- Analyze themselves in terms of behavior, error, and success.

- Learn and improve through interaction with the environment (embodiment).

- Learn quickly from large amounts of data.

- Have memory-based exemplar storage and retrieval capacities.

- Have parameters to represent short- and long-term memory, age, forgetting, etc.

In the context of generative artificial intelligence, AI agents[26] (also known as compound AI systems,[26] agentic AI,[27][28][29] large action models,[27] or large agent models[27]) are agents defined by a spectrum of attributes: complexity of their environment, complexity of their goals, a user interface based on natural language, capability of acting independently from user supervision, the use of software tools or planning, and a control flow based on large language models.[26] A common hypothetical use case is automatically booking travel plans based on a prompted request.[27][28][29][30][31][32][33] Examples of AI agents include Devin AI, AutoGPT, and SIMA.[34] Examples of AI agent frameworks include LangChain,[35][36] Microsoft AutoGen[36] and OpenAI Swarm.[37]

Proposed benefits include increased personal and economic productivity,[31][28] more innovation,[33] and liberation from tedious work.[29][33] Potential concerns include liability,[31][28][29] cybercrime,[31][27] philosophical ethical considerations,[31] AI safety[31] and AI alignment,[27][29] data privacy,[30][27][38][39][40] weakening of human oversight,[30][31][27][28][38] algorithmic bias,[30][41] compounding software errors,[27][34] the compounding effect of existing concerns about artificial intelligence,[27] unpredictability,[27][38] difficulty explaining decisions made by an agentic system,[27][38] security vulnerabilities,[27][28][39][41] underemployment,[41] job displacement,[28][41] manipulation of users,[38][42] how to adapt legal systems to agents,[29][40] hallucinations,[32][41] difficulty counteracting agents,[38] the lack of a framework to identify and manage agents,[38] reward hacking,[38] targeted harassment,[38] brand dilution,[40] promotion of AI slop,[43] impact on Internet traffic,[40] high environmental impact,[40] training data based on copyrighted content,[40] lower time efficiency in practice,[40] high cost of use,[26][40] overfitting of benchmark datasets,[26] and the lack of standardization in or reproducibility of agent evaluation frameworks.[26][38]

Applications


Hallerbach et al. explored the use of agent-based approaches for developing and validating automated driving systems. Their method involved a digital twin of the vehicle under test and microscopic traffic simulations using independent agents.[44]

Waymo has developed a multi-agent simulation environment, **Carcraft**, to test algorithms for self-driving cars.[45][46] This system simulates interactions between human drivers, pedestrians, and automated vehicles. Artificial agents replicate human behavior using real-world data.

The concept of agent-based modeling for self-driving cars was discussed as early as 2003.[47]

See also

- Ambient intelligence

- Artificial conversational entity

- Artificial intelligence systems integration

- Autonomous agent

- Cognitive architectures

- Cognitive radio – a practical field for implementation

- Cybernetics

- DAYDREAMER

- Embodied agent

- Federated search – the ability for agents to search heterogeneous data sources using a single vocabulary

- Friendly artificial intelligence

- Fuzzy agents – intelligent agents implemented with adaptive fuzzy logic

- GOAL agent programming language

- Hybrid intelligent system

- Intelligent control

- Intelligent system

- JACK Intelligent Agents

- Multi-agent system and multiple-agent system – multiple interactive agents

- Reinforcement learning

- Semantic Web – making data on the Web available for automated processing by agents

- Social simulation

- Software agent

- Software bot

Notes

Inline references

- ^ Mukherjee, Anirban; Chang, Hannah (2025-02-01). "Agentic AI: Expanding the Algorithmic Frontier of Creative Problem Solving". SSRN 5123621.

- ^ a b Russell & Norvig 2003, chpt. 2.

- ^ a b Bringsjord, Selmer; Govindarajulu, Naveen Sundar (12 July 2018). "Artificial Intelligence". In Edward N. Zalta (ed.). The Stanford Encyclopedia of Philosophy (Summer 2020 Edition).

- ^ Wolchover, Natalie (30 January 2020). "Artificial Intelligence Will Do What We Ask. That's a Problem". Quanta Magazine. Retrieved 21 June 2020.

- ^ a b Bull, Larry (1999). "On model-based evolutionary computation". Soft Computing. 3 (2): 76–82. doi:10.1007/s005000050055. S2CID 9699920.

- ^ Domingos 2015, Chapter 5.

- ^ Domingos 2015, Chapter 7.

- ^ Lindenbaum, M., Markovitch, S., & Rusakov, D. (2004). Selective sampling for nearest neighbor classifiers. Machine learning, 54(2), 125–152.

- ^ "Generative adversarial networks: What GANs are and how they've evolved". VentureBeat. 26 December 2019. Retrieved 18 June 2020.

- ^ Wolchover, Natalie (January 2020). "Artificial Intelligence Will Do What We Ask. That's a Problem". Quanta Magazine. Retrieved 18 June 2020.

- ^ Andrew Y. Ng, Daishi Harada, and Stuart Russell. "Policy invariance under reward transformations: Theory and application to reward shaping." In ICML, vol. 99, pp. 278-287. 1999.

- ^ Martin Ford. Architects of Intelligence: The truth about AI from the people building it. Packt Publishing Ltd, 2018.

- ^ "Why AlphaZero's Artificial Intelligence Has Trouble With the Real World". Quanta Magazine. 2018. Retrieved 18 June 2020.

- ^ Adams, Sam; Arel, Itmar; Bach, Joscha; Coop, Robert; Furlan, Rod; Goertzel, Ben; Hall, J. Storrs; Samsonovich, Alexei; Scheutz, Matthias; Schlesinger, Matthew; Shapiro, Stuart C.; Sowa, John (15 March 2012). "Mapping the Landscape of Human-Level Artificial General Intelligence". AI Magazine. 33 (1): 25. doi:10.1609/aimag.v33i1.2322.

- ^ Hutson, Matthew (27 May 2020). "Eye-catching advances in some AI fields are not real". Science | AAAS. Retrieved 18 June 2020.

- ^ Russell & Norvig 2003, p. 33

- ^ Salamon, Tomas (2011). Design of Agent-Based Models. Repin: Bruckner Publishing. pp. 42–59. ISBN 978-80-904661-1-1.

- ^ Russell & Norvig 2003, pp. 46–54

- ^ Stefano Albrecht and Peter Stone (2018). Autonomous Agents Modelling Other Agents: A Comprehensive Survey and Open Problems. Artificial Intelligence, Vol. 258, pp. 66-95. https://doi.org/10.1016/j.artint.2018.01.002

- ^ "A Universal Formula for Intelligence". Geeks out of the box. 2019-12-04. Retrieved 2022-10-11.

- ^ Wissner-Gross, A. D.; Freer, C. E. (2013-04-19). "Causal Entropic Forces". Physical Review Letters. 110 (16): 168702. Bibcode:2013PhRvL.110p8702W. doi:10.1103/PhysRevLett.110.168702. hdl:1721.1/79750. PMID 23679649.

- ^ Poole, David; Mackworth, Alan. "1.3 Agents Situated in Environments‣ Chapter 2 Agent Architectures and Hierarchical Control‣ Artificial Intelligence: Foundations of Computational Agents, 2nd Edition". artint.info. Retrieved 28 November 2018.

- ^ Fingar, Peter (2018). "Competing For The Future With Intelligent Agents... And A Confession". Forbes Sites. Retrieved 18 June 2020.

- ^ Burgin, Mark, and Gordana Dodig-Crnkovic. "A systematic approach to artificial agents." arXiv preprint arXiv:0902.3513 (2009).

- ^ Kasabov 1998.

- ^ a b c d e f Kapoor, Sayash; Stroebl, Benedikt; Siegel, Zachary S.; Nadgir, Nitya; Narayanan, Arvind (2024-07-01), AI Agents That Matter, arXiv:2407.01502, retrieved 2025-01-14

- ^ a b c d e f g h i j k l m "AI Agents: The Next Generation of Artificial Intelligence". The National Law Review. 2024-12-30. Archived from the original on 2025-01-11. Retrieved 2025-01-14.

- ^ a b c d e f g "What are the risks and benefits of 'AI agents'?". World Economic Forum. 2024-12-16. Archived from the original on 2024-12-28. Retrieved 2025-01-14.

- ^ a b c d e f Wright, Webb (2024-12-12). "AI Agents with More Autonomy Than Chatbots Are Coming. Some Safety Experts Are Worried". Scientific American. Archived from the original on 2024-12-23. Retrieved 2025-01-14.

- ^ a b c d O'Neill, Brian (2024-12-18). "What is an AI agent? A computer scientist explains the next wave of artificial intelligence tools". The Conversation. Archived from the original on 2025-01-04. Retrieved 2025-01-14.

- ^ a b c d e f g Piper, Kelsey (2024-03-29). "AI "agents" could do real work in the real world. That might not be a good thing". Vox. Archived from the original on 2024-12-19. Retrieved 2025-01-14.

- ^ a b "What are AI agents?". MIT Technology Review. 2024-07-05. Archived from the original on 2024-07-05. Retrieved 2025-01-14.

- ^ a b c Purdy, Mark (2024-12-12). "What Is Agentic AI, and How Will It Change Work?". Harvard Business Review. ISSN 0017-8012. Archived from the original on 2024-12-30. Retrieved 2025-01-20.

- ^ a b Knight, Will (2024-03-14). "Forget Chatbots. AI Agents Are the Future". Wired. ISSN 1059-1028. Archived from the original on 2025-01-05. Retrieved 2025-01-14.

- ^ David, Emilia (2024-12-30). "Why 2025 will be the year of AI orchestration". VentureBeat. Archived from the original on 2024-12-30. Retrieved 2025-01-14.

- ^ a b Dickson, Ben (2023-10-03). "Microsoft's AutoGen framework allows multiple AI agents to talk to each other and complete your tasks". VentureBeat. Archived from the original on 2024-12-27. Retrieved 2025-01-14.

- ^ "The next AI wave — agents — should come with warning labels". Computerworld. 2025-01-13. Archived from the original on 2025-01-14. Retrieved 2025-01-14.

- ^ a b c d e f g h i j Zittrain, Jonathan L. (2024-07-02). "We Need to Control AI Agents Now". The Atlantic. Archived from the original on 2024-12-31. Retrieved 2025-01-20.

- ^ a b Kerner, Sean Michael (2025-01-16). "Nvidia tackles agentic AI safety and security with new NeMo Guardrails NIMs". VentureBeat. Archived from the original on 2025-01-16. Retrieved 2025-01-20.

- ^ a b c d e f g h "The argument against AI agents and unnecessary automation". The Register. 2025-01-27. Archived from the original on 2025-01-27. Retrieved 2025-01-30.

- ^ a b c d e Lin, Belle (2025-01-06). "How Are Companies Using AI Agents? Here's a Look at Five Early Users of the Bots". The Wall Street Journal. ISSN 0099-9660. Archived from the original on 2025-01-06. Retrieved 2025-01-20.

- ^ Crawford, Kate (2024-12-23). "AI Agents Will Be Manipulation Engines". Wired. ISSN 1059-1028. Archived from the original on 2025-01-03. Retrieved 2025-01-14.

- ^ Maxwell, Thomas (2025-01-10). "Oh No, This Startup Is Using AI Agents to Flood Reddit With Marketing Slop". Gizmodo. Archived from the original on 2025-01-12. Retrieved 2025-01-20.

- ^ Hallerbach, S.; Xia, Y.; Eberle, U.; Koester, F. (2018). "Simulation-Based Identification of Critical Scenarios for Cooperative and Automated Vehicles". SAE International Journal of Connected and Automated Vehicles. 1 (2). SAE International: 93. doi:10.4271/2018-01-1066.

- ^ Madrigal, Alexis C. "Inside Waymo's Secret World for Training Self-Driving Cars". The Atlantic. Retrieved 14 August 2020.

- ^ Connors, J.; Graham, S.; Mailloux, L. (2018). "Cyber Synthetic Modeling for Vehicle-to-Vehicle Applications". In International Conference on Cyber Warfare and Security. Academic Conferences International Limited: 594-XI.

- ^ Yang, Guoqing; Wu, Zhaohui; Li, Xiumei; Chen, Wei (2003). "SVE: embedded agent-based smart vehicle environment". Proceedings of the 2003 IEEE International Conference on Intelligent Transportation Systems. Vol. 2. pp. 1745–1749. doi:10.1109/ITSC.2003.1252782. ISBN 0-7803-8125-4. S2CID 110177067.

Other references

- Domingos, Pedro (September 22, 2015). The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World. Basic Books. ISBN 978-0465065707.

- Russell, Stuart J.; Norvig, Peter (2003). Artificial Intelligence: A Modern Approach (2nd ed.). Upper Saddle River, New Jersey: Prentice Hall. Chapter 2. ISBN 0-13-790395-2.

- Kasabov, N. (1998). "Introduction: Hybrid intelligent adaptive systems". International Journal of Intelligent Systems. 13 (6): 453–454. doi:10.1002/(SICI)1098-111X(199806)13:6<453::AID-INT1>3.0.CO;2-K. S2CID 120318478.

- Weiss, G. (2013). Multiagent systems (2nd ed.). Cambridge, MA: MIT Press. ISBN 978-0-262-01889-0.